SYNG.

ROLE: Experience Designer, Front-end Developer

TIMELINE: 14 weeks

TEAM: Self

SKILLS: Machine Learning, Web Development (JavaScript, HTML, CSS)

Named after the Greek root syn, meaning “with” or “together”, Syng is an ear-training web application designed to improve its users’ singing skills and note recognition by visualizing pitch.

How can visual feedback benefit auditory learning?

BACKGROUND

A common hurdle for novice singers, and even for advanced vocalists, is learning to stay on pitch. An inexperienced singer may hear a note but be unable to reproduce it precisely. And if singers are unfamiliar with musical notes, or even with hearing themselves sing, how can they identify their mistakes?

Syng was created to make music education approachable by combining sound and visualization to enhance learning.

RESEARCH & IDEATION

Syng is inspired by synesthesia, a neurological condition involving cross-sensory perception. People with synesthesia have involuntary experiences such as seeing colors when reading numbers or tasting distinct flavors when hearing certain notes. Because of these sensory pairings and associations, synesthetes often have enhanced memory. Scientific literature suggests that supplementing auditory information with visual feedback can strengthen learning in the general population as well.

I researched existing musical devices that combine sound and visuals, such as guitar tuners, whose graphical displays show how far the user’s string is from a perfectly tuned note. Some audiovisual singing software exists as well, but it was often overcomplicated, with features geared toward advanced vocalists or intended only for scientific settings.

Whether the guidance came from a tuner display or a conversation with a teacher, one key element stood out: users need immediate feedback on HOW to make adjustments.

DEVELOPMENT

MAPPING MUSIC INTERACTION

PROTOTYPES

I experimented with different visualizations of pitch matching, representing a given note as one circle and the singer’s voice as another. The relationship between the two circles would indicate whether the pitch was correct. My goal was to find the visualization that made singing errors visible the moment they happened.

Correct pitch increases diameter of given pitch

Harmony produces color mixing

Flat pitch increases width of voice circle

Correct pitch matches diameter of given pitch

I decided that keeping the given note-circle static and moving the singer’s voice-circle up and down was the clearest visualization of pitch. When the pitches match perfectly, the singer’s circle aligns with the note-circle. Much like notes on a musical staff, a sharp pitch rises higher and a flat pitch sits lower.
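The vertical mapping described above can be sketched as a small function. This is a minimal illustration, not Syng’s actual code: it assumes the sung and target frequencies are already known in hertz, and `voiceCircleY`, `PIXELS_PER_SEMITONE`, and `MAX_OFFSET_SEMITONES` are hypothetical names and tuning values.

```javascript
// Sketch: vertical position of the singer's voice-circle relative to the static note-circle.
// Hypothetical constants: how many pixels one semitone of error moves the circle,
// and how far off-pitch the circle may travel before it is clamped.
const PIXELS_PER_SEMITONE = 60;
const MAX_OFFSET_SEMITONES = 2;

function voiceCircleY(noteCircleY, sungFrequency, targetFrequency) {
  // Signed distance from the target in (fractional) semitones: positive = sharp, negative = flat.
  const semitones = 12 * Math.log2(sungFrequency / targetFrequency);
  // Clamp so a wildly off-key note keeps the circle on screen.
  const clamped = Math.max(-MAX_OFFSET_SEMITONES, Math.min(MAX_OFFSET_SEMITONES, semitones));
  // Canvas y grows downward, so subtracting makes sharp notes rise and flat notes sink.
  return noteCircleY - clamped * PIXELS_PER_SEMITONE;
}

console.log(voiceCircleY(200, 440, 440)); // perfect match: same y as the note-circle
console.log(voiceCircleY(200, 415.3, 440)); // flat: larger y, so the circle sits lower
```

Measuring the error in semitones rather than raw hertz keeps the motion consistent across octaves, since the same interval error always moves the circle the same distance.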

MACHINE LEARNING: TEACHING A COMPUTER, SO IT CAN TEACH YOU

Syng uses the pitch detection feature of ml5.js, an open-source machine learning library, to estimate the frequency of the microphone input in hertz. I then programmed Syng to map each frequency to its corresponding musical note.
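The frequency-to-note step can be illustrated with the standard equal-temperament formula. This is a sketch under stated assumptions rather than Syng’s implementation: it assumes A4 = 440 Hz tuning and a frequency value such as the one ml5.js’s pitch detection passes to its callback; `frequencyToNote` and `NOTE_NAMES` are hypothetical names.

```javascript
// Sketch: convert a detected frequency (Hz) into the nearest equal-tempered note.
// Assumes A4 = 440 Hz, which is MIDI note number 69.
const NOTE_NAMES = ["C", "C#", "D", "D#", "E", "F", "F#", "G", "G#", "A", "A#", "B"];
const A4_FREQ = 440;
const A4_MIDI = 69;

function frequencyToNote(frequency) {
  // Fractional MIDI number: each doubling of frequency adds 12 semitones.
  const midiFloat = A4_MIDI + 12 * Math.log2(frequency / A4_FREQ);
  const midi = Math.round(midiFloat);
  // Deviation from the nearest note in cents (100 cents = 1 semitone).
  const cents = Math.round((midiFloat - midi) * 100);
  // Pitch class (0 = C ... 11 = B) selects the note name; MIDI 60 is C4.
  const name = NOTE_NAMES[((midi % 12) + 12) % 12];
  const octave = Math.floor(midi / 12) - 1;
  return { name, octave, midi, cents };
}

console.log(frequencyToNote(261.63)); // a detected frequency close to middle C
```

For example, `frequencyToNote(440)` returns `{ name: "A", octave: 4, midi: 69, cents: 0 }`. The `cents` field is what a sharp/flat display would need, while `name` and `octave` identify the note itself.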

USER TESTING

LEARNING WHAT WORKS AND WHAT DOESN’T

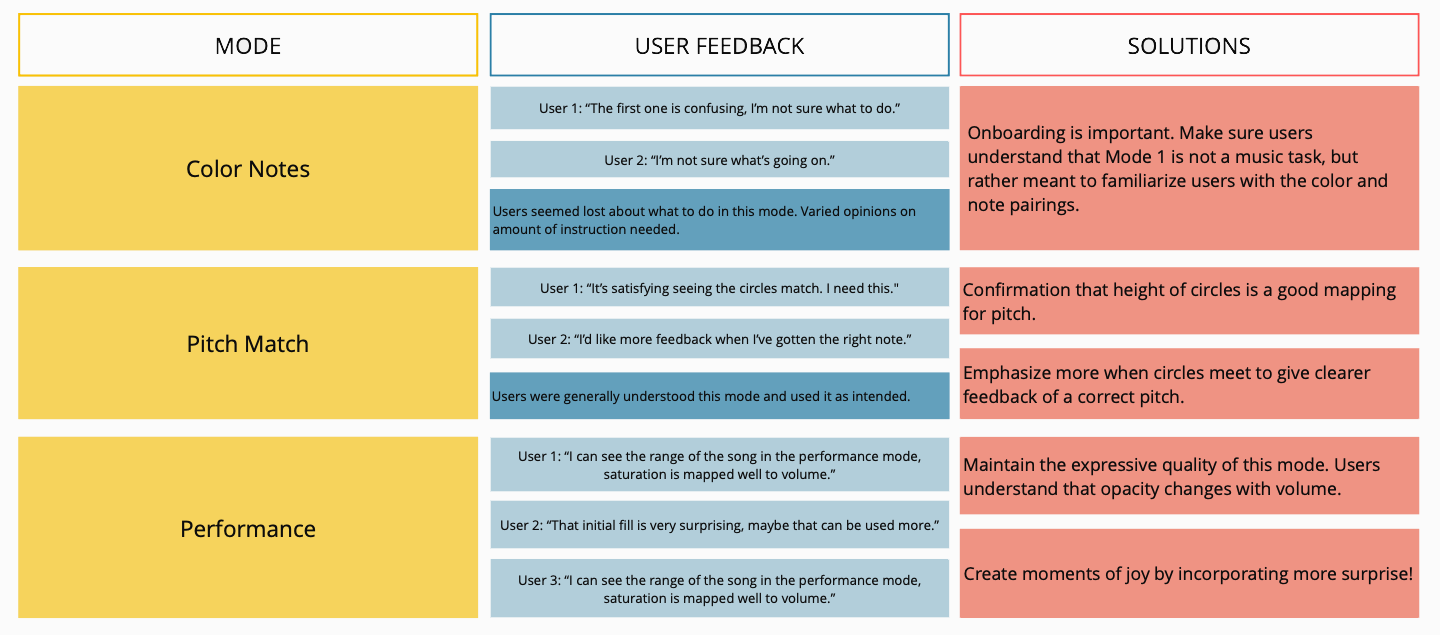

Users tested three prototypes, which would become the three phases of the final web application.

FINAL PRODUCT

EXPERIENCE DESIGN THAT MARRIES INTERACTIVITY WITH VISUAL STYLE

Part 1: Intro

The intro establishes that throughout the whole experience, each note, regardless of octave, is paired with a particular colored circle.
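Pairing each note with one color regardless of octave amounts to keying the color on the pitch class (the MIDI note number modulo 12). The sketch below assumes MIDI note numbers as input; `noteColor` and its evenly spaced HSL palette are hypothetical and not Syng’s actual colors.

```javascript
// Sketch: every pitch class maps to one color, so C3, C4, and C5 share a color.
// The evenly spaced HSL hues are a hypothetical palette.
function noteColor(midi) {
  const pitchClass = ((midi % 12) + 12) % 12; // C = 0, C# = 1, ... B = 11
  const hue = pitchClass * 30; // 12 pitch classes spread around the 360-degree hue wheel
  return `hsl(${hue}, 80%, 60%)`;
}

console.log(noteColor(60), noteColor(72)); // C4 and C5 produce the same color string
```

The returned string is a valid CSS color, so it could be assigned directly as a canvas `fillStyle` or an element’s background.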

Part 2: Pitch Match

Pitch Match is the ear-training mode. Users play a tone and sing it back, and a visual cue shows whether they are sharp, flat, or perfectly matched.
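Deciding between sharp, flat, and matched requires some tolerance, because no voice holds an exact frequency. A minimal sketch, assuming the target tone’s frequency is known and treating anything within ±10 cents as a match; `classifyPitch` and the threshold are hypothetical choices, not Syng’s documented values.

```javascript
// Sketch: classify a sung frequency against the played target tone.
// TOLERANCE_CENTS is an assumed threshold for counting as "perfectly matched".
const TOLERANCE_CENTS = 10;

function classifyPitch(sungFrequency, targetFrequency, tolerance = TOLERANCE_CENTS) {
  // Signed error in cents: 1200 cents per octave, positive when the singer is too high.
  const cents = 1200 * Math.log2(sungFrequency / targetFrequency);
  if (cents > tolerance) return "sharp";
  if (cents < -tolerance) return "flat";
  return "matched";
}

console.log(classifyPitch(450, 440)); // roughly 39 cents above A4
```

Working in cents rather than hertz means the same tolerance applies fairly to low and high notes.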

Part 3: Perform

Perform is a final freestyle mode that provides a more expressive visual while the user sings. It introduces opacity that changes with the vocalist’s volume.
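One common way to turn volume into opacity is to take the root-mean-square (RMS) level of a buffer of audio samples and scale it into an opacity range. This is a sketch under assumptions: it expects a `Float32Array` of samples in [-1, 1], such as one filled by the Web Audio `AnalyserNode.getFloatTimeDomainData()` method, and `volumeToOpacity`, `MIN_OPACITY`, and `FULL_VOLUME_RMS` are hypothetical names and values.

```javascript
// Sketch: derive circle opacity from microphone loudness.
// Hypothetical constants: a quiet voice never fully disappears, and an RMS of 0.3
// is treated as "full" loudness.
const MIN_OPACITY = 0.15;
const FULL_VOLUME_RMS = 0.3;

function rms(samples) {
  if (samples.length === 0) return 0; // guard against dividing by zero
  let sumSquares = 0;
  for (const s of samples) sumSquares += s * s;
  return Math.sqrt(sumSquares / samples.length);
}

function volumeToOpacity(samples) {
  // Normalize loudness to [0, 1], then map it linearly into [MIN_OPACITY, 1].
  const level = Math.min(rms(samples) / FULL_VOLUME_RMS, 1);
  return MIN_OPACITY + (1 - MIN_OPACITY) * level;
}

console.log(volumeToOpacity(new Float32Array(1024))); // silence gives the minimum opacity
```

In a browser, the buffer would be refilled from the analyser on each animation frame before calling `volumeToOpacity`, so the visual fades and brightens as the singer gets quieter or louder.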