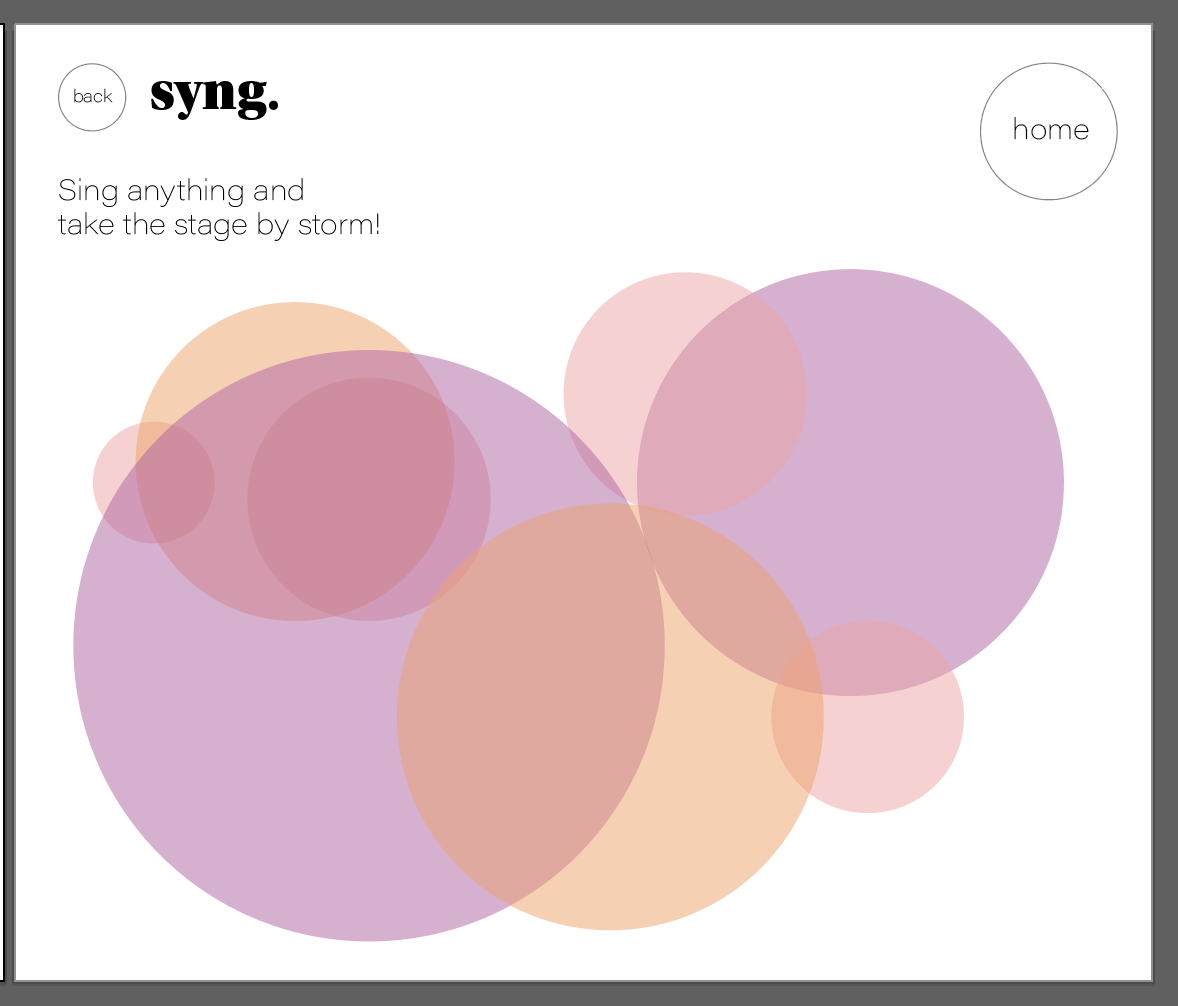

FUNCTIONALITY

My top priority was making this work. Are the mappings informative? Does it accomplish the goal (visualizing singing) in a meaningful way?

Color notes:

“The first one is confusing, I’m not sure what to do”

“I’m not sure what’s going on”

In the first mode, people seemed a bit lost about what to do. There was a natural tendency to look for a pitch-matching task. One person wanted less explanation of the task, another wanted more, and most people didn’t really read the instructions.

Pitch Match:

“Pitch match is more effective for learning, maybe you need more explanation or a visual cue.”

“I’d like more feedback when I’ve gotten the right note”

“It’s satisfying seeing the circles match”

“I’m not that bad”

“I need this”

Pitch Match came across as very straightforward, and most people enjoyed it as a challenge. I liked watching people play the note many times to recenter themselves and try again. This is exactly how I intended it to be used.
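For anyone curious how a "match" like this can be judged, here is a minimal sketch, not the project's actual code. It assumes the sung pitch and the target note are both available as frequencies in Hz, and it compares them in cents (hundredths of a semitone). The function names and the 50-cent tolerance are hypothetical choices made for illustration.

```python
import math


def cents_off(sung_hz: float, target_hz: float) -> float:
    """Signed distance from the target in cents (100 cents = 1 semitone)."""
    return 1200.0 * math.log2(sung_hz / target_hz)


def is_match(sung_hz: float, target_hz: float, tolerance_cents: float = 50.0) -> bool:
    """True when the sung pitch is within the tolerance of the target.

    The 50-cent default (a quarter tone either side) is an assumed value,
    not something taken from the project.
    """
    return abs(cents_off(sung_hz, target_hz)) <= tolerance_cents


if __name__ == "__main__":
    target = 440.0  # A4
    for sung in (442.0, 466.16):
        print(sung, round(cents_off(sung, target), 1), is_match(sung, target))
```

Comparing in cents rather than raw Hz keeps the tolerance equally forgiving for low and high notes. It would also be one way to trigger the extra "you got it" feedback a tester asked for.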

Performance:

“There is good initial feedback in performance mode. It’s a bit fast”

“That initial fill is very surprising, maybe that can be used more”

“I can see the range of the song in the performance mode, saturation is mapped well to volume”

As expected, this mode got a lot of feedback because it’s more expressive. People seemed to genuinely like the color-to-frequency mapping as well as the opacity changes based on volume. It was fun watching people get excited about singing a song when it was paired with the visuals, even national anthems!
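The mapping described above can be sketched roughly as follows. This is an illustration under assumptions, not the project's implementation. It assumes pitch arrives as a frequency in Hz and volume as a value between 0 and 1. The 80 to 1000 Hz range, the logarithmic scaling, and the function names are all hypothetical.

```python
import colorsys
import math


def pitch_to_hue(freq_hz: float, low_hz: float = 80.0, high_hz: float = 1000.0) -> float:
    """Map a frequency to a 0..1 position on a log scale, clamped to the range.

    The range is an assumed rough singing range, not a value from the project.
    """
    clamped = min(max(freq_hz, low_hz), high_hz)
    return math.log(clamped / low_hz) / math.log(high_hz / low_hz)


def voice_to_rgba(freq_hz: float, volume: float) -> tuple[int, int, int, int]:
    """Pitch picks the color, volume picks the opacity (both as 0..255 ints)."""
    # Use only 80% of the hue wheel so the highest notes don't wrap back to red.
    hue = pitch_to_hue(freq_hz) * 0.8
    r, g, b = colorsys.hsv_to_rgb(hue, 1.0, 1.0)
    alpha = min(max(volume, 0.0), 1.0)
    return (round(r * 255), round(g * 255), round(b * 255), round(alpha * 255))


if __name__ == "__main__":
    print(voice_to_rgba(80.0, 0.25))   # lowest pitch, quiet
    print(voice_to_rgba(440.0, 0.9))   # mid pitch, loud
```

A logarithmic scale is used because pitch perception is roughly logarithmic, so each octave covers an equal slice of the color range.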

AESTHETICS

This project has a huge visual component so I took seriously any comments about improving its look.

“In the performance mode, maybe the graphics could be more feathery or painter-like”

“It should be as stylish as possible”

“Circle buttons?”

“I like the color, it’s so beautiful”

My next step is to improve the navigation between these modes and make the project feel more like a website. I would love to add more modes, but I will see how time allows. I feel better about it than I expected to, and I’m excited to polish it.